Radio needs YOU!

Radio is critical infrastructure that relies on volunteers and communities. Recent events on the Gold Coast prove radio's value when centralised communications fail.

From LoRaWAN flood sensors to AI-powered image sorting, these are rough notes from the workbench: field tests in paddocks and basements, small utilities we build for ourselves, and the lessons that come from wiring real hardware into real places. They're shared in case they're useful for whatever you're building next.

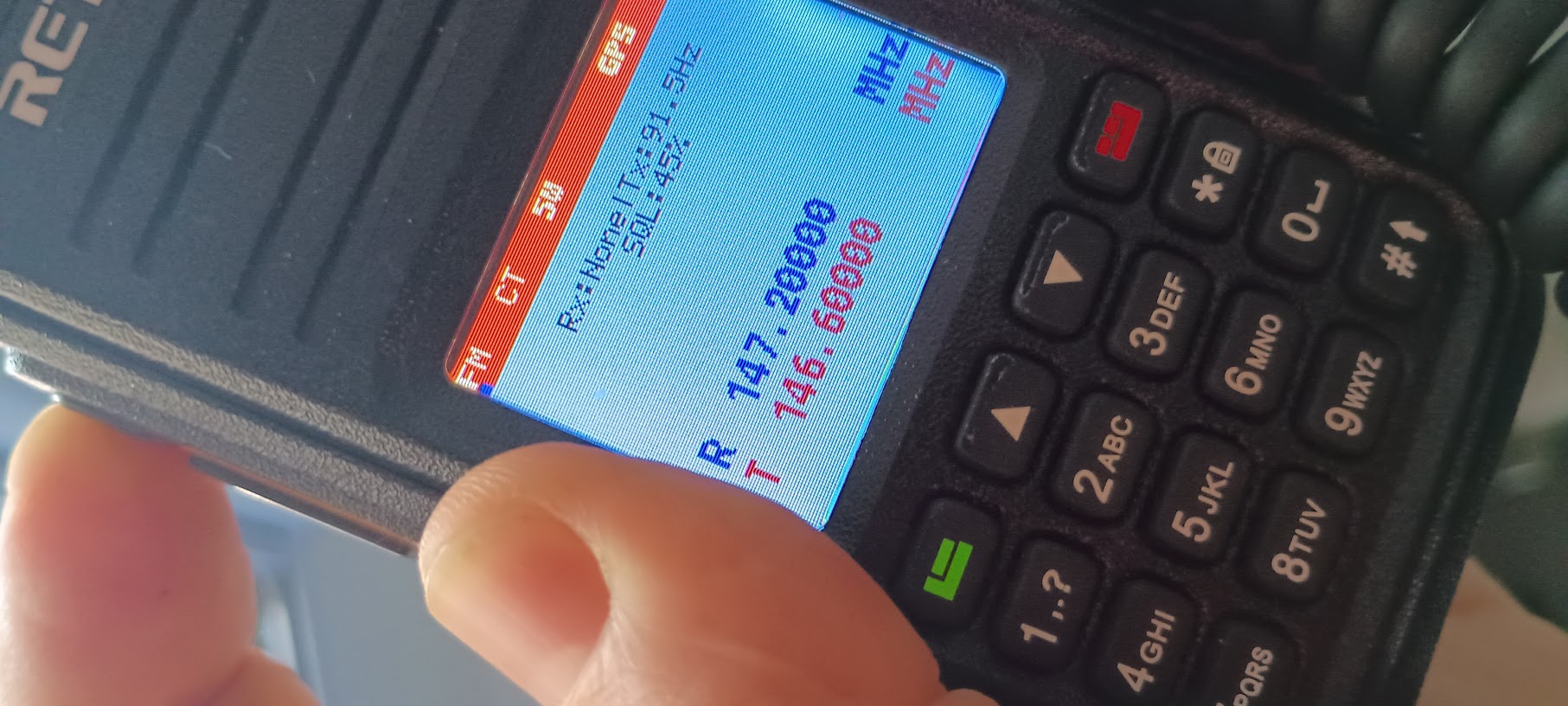

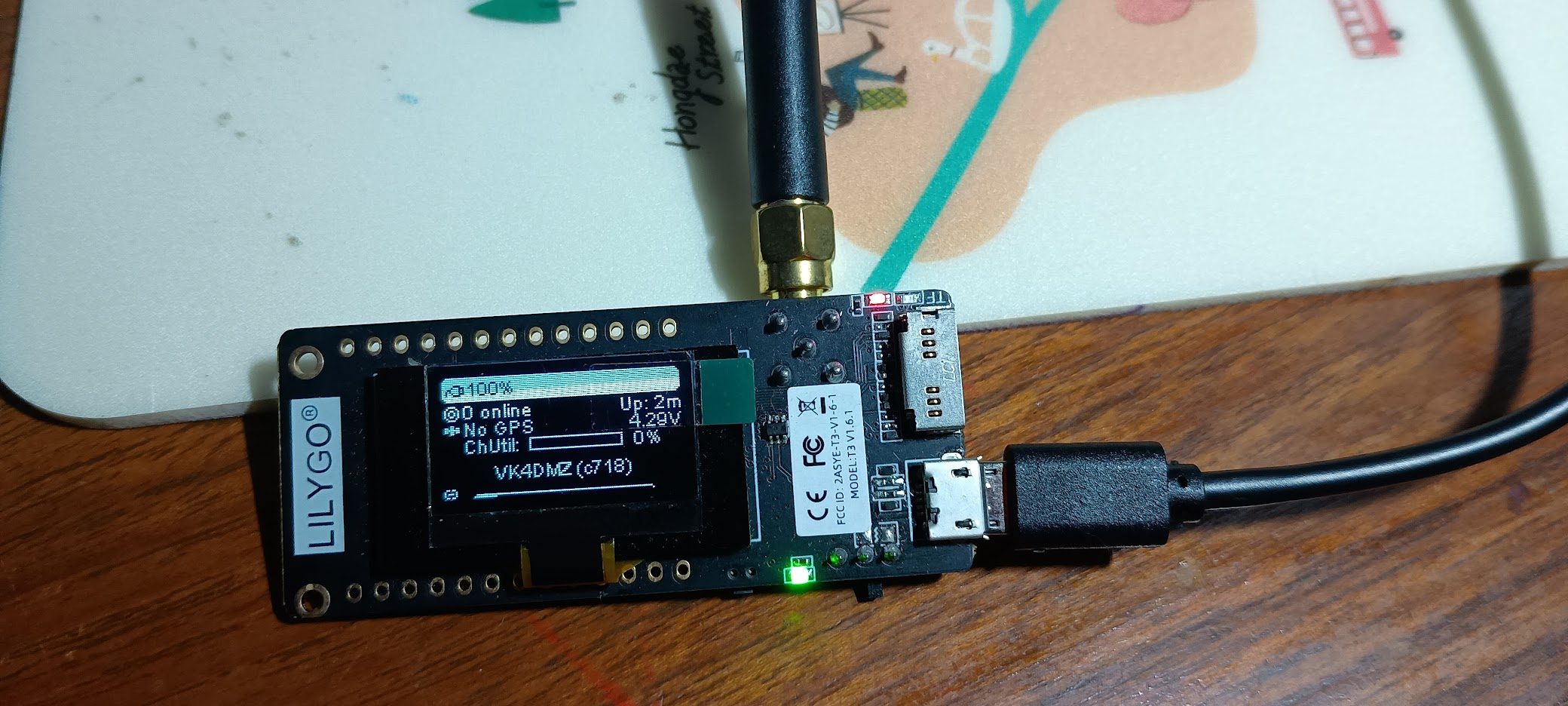

A practical how-to for running Meshtastic on 433 MHz with a TTGO LoRa board, using your amateur radio callsign and ham mode settings.

I built a small open-source tool called Screenshot CLI. It does exactly what the name suggests: it takes screenshots from the command line. It's written in TypeScript and uses Playwright under the hood...

Exploring open-source mesh networking solutions...

Exploring why Australia is an ideal environment for IoT innovation...

Understanding Cat 1bis and its applications in IoT...

Recap of UnrealFest24 and game development insights...

Migrating from Confluence to WikiJS for better documentation...